
By: Stijn Vermeeren and Joachim Ott

Artificial intelligence (AI) plays a role in more and more aspects of our daily lives. Automated decisions made by AI systems influence what we see and what is recommended to us, and in some cases AI systems even make life- and career-changing decisions. Besides great advantages, this sadly also means that more people are affected by unfair decisions made by biased AI systems, without having access to explanations of how these decisions were made.

The discourse around bias in AI can be a very polarising one. Many different concerns need to be balanced:

- AI systems can have an impact, not just on individual users, but also on society as a whole. It's our ethical responsibility to ensure that this impact is positive and inclusive, and that it does not amplify any inequalities or prejudices that might already exist in our society.

- On the other hand, excessive or improper regulations for AI systems can stifle innovation and give a competitive advantage to countries or organisations that do not have such regulations in place.

- While perfectly bias-free AI systems might never be achievable (after all, no human decision-maker is completely unbiased either), it is currently too easy to find problematic examples of biases in AI systems. There is a clear need for better solutions.

In this article, we discuss these considerations in more detail, and we explain how Starmind makes sure it deserves the trust that our users have in our product.

Examples of Bias

Wikipedia defines bias as a disproportionate weight in favor of or against an idea or thing, usually in a way that is closed-minded, prejudicial or unfair. It is especially problematic if the bias discriminates along one or more of these lines: age, gender, religion, sexual orientation, race, nationality, etc.

Several examples of bias in AI systems have been widely reported by the media in recent years. Here are some examples:

- Advertisements for criminal background checks are more likely to show up when searching for names that are associated with a specific ethnic group (BBC)

- An AI system for reviewing job applications gives a higher score to male applicants and to resumes using more masculine language. (Reuters)

- Some photos of people with darker skin are miscategorized as "gorillas" (BBC)

- An image search for "CEO" shows mostly white male people (The Verge)

- An AI camera repeatedly mistakes the linesman's bald head for the ball in a Scottish football match (The Verge)

- Additional interesting cases are covered in a 2017 keynote talk by Kate Crawford

In traditional software development, any bias could be fixed by improving the design of the computer program. In AI and machine learning, however, bias can have more subtle origins. In particular, bias can be caused not only by the source code, but also by the data used to train an AI model. We can only gather data from the world we live in, and that world has a long history of prejudice and discrimination. Consequently, most datasets will contain some form of prejudice and discrimination as well.

For example, suppose that an AI model is trained on biographies of historical figures, and that those biographies use the word "genius" more frequently for people of a specific gender. Unless specific debiasing techniques are used, the final model will most likely also contain (and potentially even amplify) the biased association between the word "genius" and the specific gender that exists in the training data.
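The kind of skew described above can already be seen by counting words, before any model is trained. The following is a minimal sketch on an invented toy corpus (the sentences and the `cooccurrence_rate` helper are illustrative assumptions, not real biographies or a production method). It compares how often "genius" appears in sentences about "he" versus "she".

```python
# Toy corpus: invented sentences for illustration, not real biographies.
corpus = [
    "he was a genius of his age",
    "he was hailed as a genius",
    "she was a diligent scholar",
    "she was a genius and a pioneer",
    "he was a careful scholar",
]


def cooccurrence_rate(sentences, target, group_word):
    """Share of sentences mentioning group_word that also mention target."""
    with_group = [s.split() for s in sentences if group_word in s.split()]
    if not with_group:
        return 0.0
    hits = sum(1 for tokens in with_group if target in tokens)
    return hits / len(with_group)


rate_he = cooccurrence_rate(corpus, "genius", "he")
rate_she = cooccurrence_rate(corpus, "genius", "she")
print(f"genius | he:  {rate_he:.2f}")
print(f"genius | she: {rate_she:.2f}")
```

A model trained on text with such a gap has no way of knowing that the gap reflects how the biographies were written rather than a fact about the people described.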

Explicability of Decisions

When a human makes a decision that you don't understand, then you can ask them to explain their reasoning. When they are not able to do so, then you will likely remain skeptical about their decision. Ideally, you should be able to approach AI systems in the same way.

Users are more likely to trust and accept AI solutions whose decisions do not come out of a "black box", but are instead backed by additional explanations. These explanations also make the AI solution more accountable, as they make it easier to identify and fix any bias that might exist in the model.

Regulating AI Systems

We defined bias as a disproportionate weight in favor of or against an idea or thing, usually in a way that is closed-minded, prejudicial or unfair. However, as Abeba Birhane (one of the leading voices on ethical AI) writes, concepts such as bias, fairness and justice are moving targets that depend on the historical and cultural context. This means that removing bias from AI systems is not an exact science. No solution can ever be completely and eternally bias-free. At the same time, not all types of bias are equally harmful. How harmful a bias is depends not only on the AI system itself, but also on its practical application (how the AI impacts the world around it).

As a consequence, regulating AI systems is a difficult but also important task. The new AI Regulation, proposed in April 2021 by the European Commission, tries to handle this by taking a risk-based approach.

- Low-risk applications are only lightly regulated.

- Most rules only apply to high-risk applications, for example applications that can influence who has access to health care, or applications that are used by law enforcement for criminal profiling.

- AI applications that pose a threat to fundamental human rights are completely banned.

Because this is one of the very first attempts at regulating AI, we believe that a lot will still change over the coming years. However, the regulation proposed by the European Commission already acknowledges two critical points:

- AI can bring huge benefits to society, when used in appropriate, human-centric ways.

- Requirements around bias in AI systems need to be proportional to the risks associated with the application.

Checklist for AI solutions like Starmind

How do you control for bias when you don't develop AI solutions yourself, but rather buy them from vendors such as Starmind? The following checklist, based on academic publications by Krishnakumar and Stilgoe et al., summarises the most important points to consider, and explains how Starmind deals with them.

Justification

Do the benefits of using an automated AI-based solution outweigh the risks?

Starmind increases productivity by letting knowledge flow freely across existing organizational, geographical and language barriers. This is measurable: Starmind provides metrics on how much time users save by getting the right answer in a short time.

The use of AI is essential to Starmind. The resources required for manually creating and maintaining an equivalent database of "who knows what" within an organization would be prohibitive. Incorrect associations made by Starmind's AI can easily be rectified without having a harmful impact on the user (see section on "Anticipation" below). The use of Starmind is therefore justified and beneficial to organizations and their employees.

Explanation

Can the AI model give reasonable explanations that help to understand its decisions?

Starmind's AI is trained to make decisions such as:

- Who is most likely to give a good answer to a question on a certain topic?

- What are the most relevant topics to summarize the skills and knowledge of a user?

Starmind puts a high value on being able to explain these decisions. Starmind's architecture helps to ensure this explicability at a fundamental level:

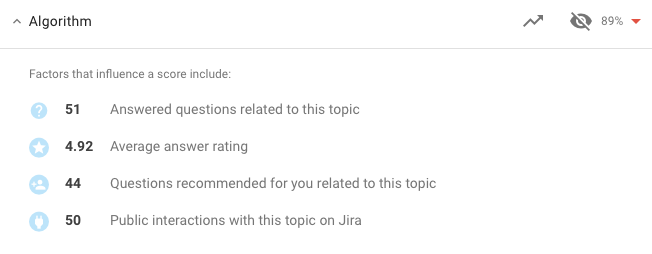

- All topics have concrete, interpretable, human-readable labels.

- Starmind can trace every connection between a user and a topic back to its source(s), such as a well-rated answer posted on Starmind, data from a specific connected learning source, etc. For each topic, an overview of these sources is shown to the user.

An overview of how a Starmind user is connected to the topic "Algorithm", as it is shown to the user in Starmind's user interface.
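To make the idea of traceable connections concrete, here is a minimal sketch of a data structure in which every user-topic connection carries the evidence it was derived from. The class names, field names and source labels are hypothetical and chosen for illustration only; they do not describe Starmind's actual data model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Evidence:
    source_type: str  # hypothetical label, e.g. "answer" or "learning_source"
    reference: str    # hypothetical identifier of the underlying item


@dataclass
class TopicConnection:
    user: str
    topic: str  # human-readable label, e.g. "Algorithm"
    evidence: list[Evidence] = field(default_factory=list)

    def explain(self) -> str:
        """Return a readable summary of why this connection exists."""
        sources = ", ".join(f"{e.source_type}:{e.reference}" for e in self.evidence)
        return (
            f"{self.user} is connected to '{self.topic}' via "
            f"{sources or 'no recorded sources'}"
        )


conn = TopicConnection("alice", "Algorithm", [Evidence("answer", "q-101")])
print(conn.explain())
```

Keeping the evidence next to the connection, rather than only a numeric score, is what lets an explanation be shown to the user without any extra model introspection.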

Anticipation

Does the AI vendor have a channel where you can report suspected biased decisions, as well as proper processes to respond to such cases?

The Starmind user interface contains a feedback form where users can report issues directly to Starmind. Starmind has an in-house Data Protection Officer and a Customer Success team, who will follow up on any issues reported by end-users and customers.

Additionally, users can directly remove unfitting topics, and manually add aspirations and interests to the system. Users who receive a request to answer a question can decline this request, thereby informing the system to select different experts for similar questions in the future. Questions can also be answered by users who were not automatically selected by Starmind, which increases the probability for them to be selected as topic experts in the future.

Reflexiveness

Does the AI system collect and process data in a transparent and robust way? Is the system able to cope with changes, limitations and inaccuracies in the data?

Starmind learns from the topics that users interact with in their daily work. The expertise model in Starmind adapts automatically to any change, for example when a user moves to a different role. Newer interactions are weighted more strongly than older interactions. This weighting is balanced so that Starmind acknowledges long-standing subject experts while also quickly recognizing newcomers.
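One common way to weight newer interactions more strongly is exponential decay with a half-life. The sketch below illustrates that general technique only; the half-life value, the function names and the example ages are assumptions, and the article does not state which weighting scheme Starmind actually uses.

```python
HALF_LIFE_DAYS = 180.0  # hypothetical value, not a documented Starmind setting


def interaction_weight(age_days, half_life_days=HALF_LIFE_DAYS):
    """Weight of one interaction; it halves every half_life_days."""
    return 0.5 ** (age_days / half_life_days)


def expertise_score(interaction_ages_days):
    """Sum of decayed weights over all of a user's interactions on a topic."""
    return sum(interaction_weight(age) for age in interaction_ages_days)


# A long-standing expert with many older interactions (ages in days)...
veteran = expertise_score([720, 700, 650, 600, 540, 400, 365, 300])
# ...and a newcomer with a few very recent ones.
newcomer = expertise_score([30, 20, 10, 5])
print(f"veteran: {veteran:.2f}, newcomer: {newcomer:.2f}")
```

With a scheme like this, old interactions never drop to zero, so a long track record still counts, while a short burst of recent activity can build up a comparable score quickly. The half-life controls where that balance sits.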

Company-internal terminology, project names and any other specific wording is automatically recognized by Starmind, without any need for manually adding these terms to a database.

Responsiveness

Are the benefits and risks of the system potentially affected by external factors (for example changes in society)?

Starmind has played a crucial role in supporting its customers in dealing with major societal changes, such as the shift towards working from home. Without the possibility of asking a colleague at the next desk, employees used Starmind to get the answers they needed from experts in a minimal amount of time. For many organizations, the Starmind application also helped to deliver clear communication around the coronavirus pandemic to all employees.

Auditability

Can the training data, pretrained model components and algorithms used in an AI system be verified? Is documentation available to the client / user?

The data Starmind uses to detect expertise is provided by the customers themselves, and can therefore be audited at any time.

Starmind's language processing pipeline builds on top of well-established algorithms and models that continuously undergo rigorous audits against bias, not just by Starmind, but also by independent research institutes worldwide. For example, we use Word Embedding Association Tests (WEAT) to benchmark our automatic text comprehension components for different types of bias, such as gender and age biases.
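For readers unfamiliar with WEAT, the following is a minimal sketch of its effect-size computation as commonly described in the literature: each target word gets an association score (mean cosine similarity to attribute set A minus mean cosine similarity to attribute set B), and the effect size is the difference between the mean scores of the two target sets, divided by the standard deviation of the scores over all target words. The two-dimensional vectors are invented toy data, the sample standard deviation is an implementation choice made here, and nothing in this sketch reflects Starmind's actual benchmark setup.

```python
import math
from statistics import mean, stdev


def cosine(u, v):
    """Cosine similarity between two vectors given as sequences of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def association(w, attrs_a, attrs_b):
    """How much closer w is to attribute set A than to attribute set B."""
    return mean(cosine(w, a) for a in attrs_a) - mean(cosine(w, b) for b in attrs_b)


def weat_effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association of X and Y, normalised by the
    sample standard deviation over all target words."""
    s_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    s_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (mean(s_x) - mean(s_y)) / stdev(s_x + s_y)


# Invented toy embeddings: X leans towards A, Y leans towards B.
targets_x = [(1.0, 0.1), (0.9, 0.2)]
targets_y = [(0.1, 1.0), (0.2, 0.9)]
attrs_a = [(1.0, 0.0), (0.95, 0.05)]
attrs_b = [(0.0, 1.0), (0.05, 0.95)]

effect = weat_effect_size(targets_x, targets_y, attrs_a, attrs_b)
print(f"WEAT effect size: {effect:.2f}")
```

An effect size near zero suggests that the two target sets are about equally associated with both attribute sets. A large positive or negative value points to a skewed association that deserves a closer look.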

Clients have access to documentation of all aspects of the product, including the algorithms.

Conclusion

AI systems are impacting the lives of millions of people every day, so bias matters. Thankfully, awareness of the different types of bias that can exist in AI solutions is increasing. New methods for detecting and mitigating bias are being developed all the time. New regulations, such as the one proposed by the European Commission, are trying to strike a balance between supporting the huge potential benefits of AI and putting stricter requirements on higher-risk applications.

Starmind fully supports this trend. We keep track of the latest methods to detect and mitigate bias, and apply them to our AI models and datasets. For us, making our AI fair and human-centric is not just an afterthought; it is at the core of our mission.

About the authors

Stijn Vermeeren is the Head of AI at Starmind.

Joachim Ott is a Data Scientist at Starmind. He is also a PhD student at the Institute of Neuroinformatics (INI) of ETH Zurich and University of Zurich (UZH).